The Why

Let me tell you a story that will help you understand why this is an important concept.

When I first started out in IT, a customer who wanted a new server would submit an order. This order would ask for a certain CPU type, a certain amount of RAM, storage devices, and so on. Once the order had been confirmed and sent to the datacentre, an engineer would begin "racking up" (installing) a physical server that met the customer's requirements. Racking up a server meant building the server and installing it into a rack, which looks a bit like this:

(Janwikifoto, CC BY-SA 3.0, via Wikimedia Commons)

It took anywhere between 6 and 16 hours to provision 2-3 servers in this manner. But the important thing is the operating system. After the physical system had been installed into the rack (that's what the engineers above are putting the server into), the operating system was installed and the customer was given the login details so they could install software and make the server work for them.

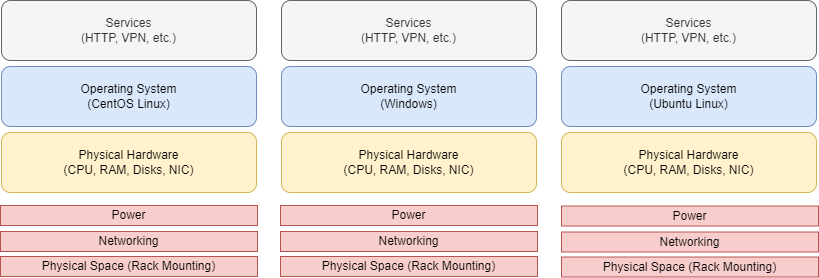

Visually this classic approach to physical infrastructure looks like this:

There's a problem here that's not so obvious: the entire physical server has only one OS installed on it and is likely to be used for just one purpose. That seems quite wasteful, doesn't it? So the customer might choose to run multiple things inside the same OS, but there's a catch. While it's more than possible for the customer to run multiple software suites, what if one has a security issue? If it's exploited, then the entire system is compromised. What if one piece of software conflicts with another in some way? Things can quickly become complicated.

Running each piece of software on its own physical piece of hardware is one answer, because each one would get its own operating system, but that's even more wasteful. Each server also needs its own power supply and network connection, and takes up more physical space.

This is where virtualization steps in, as I'm sure you've guessed.

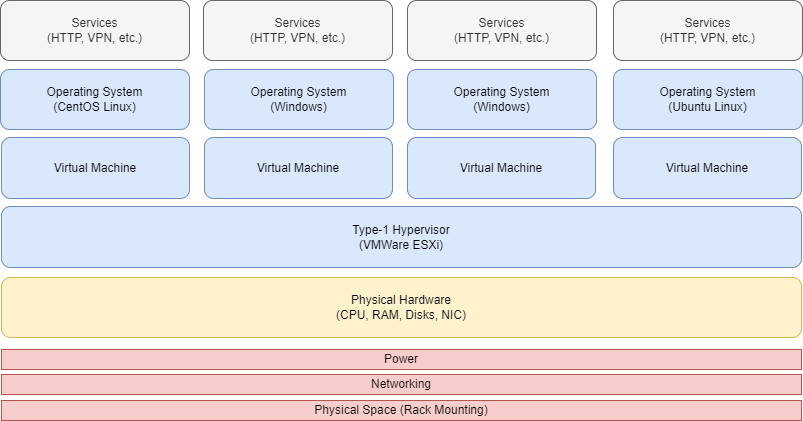

Going back to the original server the customer asked for: instead of installing a normal operating system, we can install what's called a hypervisor. We'll cover these later on, but what this essentially means is that we can now create virtual servers on the one physical server. On each virtual server we can install an operating system, and once it's installed we can use that OS to install whatever software we like. These virtual machines are called "guests".

Visually we get this now:

Now here's a question for you: how long do you think it takes to create and run a virtual server? Maybe an hour? Maybe 2-3 hours? Actually, it can be as quick as a few seconds in some cases but at most minutes. Not hours. Minutes. We've not only made it possible to efficiently use the hardware we have but we've now made it possible to create new servers, over and over, in minutes. That's quite an improvement over hours.

Now we have used one physical server to do multiple things. What's more, if one virtual server is exploited - perhaps because of weak software security or a bad configuration - the other systems are isolated from it (virtual servers are quite difficult to "escape"), so they're not affected. They remain safe.

There's also something else that the customer gains from using a single physical server to run multiple operating systems and workloads...

Efficiency

Virtualization is very efficient. But why?

Our fictional customer, above, begins by using each operating system to run an independent server without having to run a new physical server for each one. That's very efficient from a hardware perspective, so it lowers capital costs (the money spent up front on hardware).

What's really efficient about this is the utilisation of the server.

Let's say our customer creates five virtual servers on the one physical host. If they run a web server on each virtual server, and each one constantly uses 10-15% of the host's resources, then the (physical) server's total utilisation will sit somewhere between 50% and 75%. That's actually pretty ideal, because there is room for "burst" traffic (a big, temporary surge in traffic to one or two sites) and maybe even room to run another server.

Imagine if the customer ran each of these five web servers on its own hardware. That would be five physical servers, each utilised at just 10-15%, 24/7. That's an extremely poor return on your investment as a business and simply a waste of resources, not to mention the impact on the environment.
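
To make the numbers concrete, here's a minimal sketch of that arithmetic in Python. It assumes guest loads simply add together and ignores any overhead from the hypervisor itself; the function names are made up for illustration and don't come from any real tool.

```python
def host_utilisation(guest_loads_pct):
    """Add up per-guest loads (as a percentage of the host) into one figure.

    Assumption: loads add linearly and hypervisor overhead is ignored.
    """
    return sum(guest_loads_pct)


def headroom(guest_loads_pct, capacity_pct=100):
    """Percentage of the host left over for burst traffic or another guest."""
    return capacity_pct - host_utilisation(guest_loads_pct)


GUESTS = 5
quiet = [10] * GUESTS  # every guest at the low end of the 10-15% range
busy = [15] * GUESTS   # every guest at the high end of the 10-15% range

# One physical host running all five guests.
print(host_utilisation(quiet), host_utilisation(busy))  # 50 75
print(headroom(busy))                                   # 25

# The same five workloads on five dedicated physical servers:
# this is how much of each box sits idle, even when busy.
idle_per_dedicated_server = [100 - load for load in busy]
print(idle_per_dedicated_server)                        # [85, 85, 85, 85, 85]
```

Even with every guest at the top of its range, the shared host still has about a quarter of its capacity spare, while each dedicated server would leave 85% or more of its capacity unused.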

It's the fact that we can slice up a piece of physical infrastructure into virtual "pieces", and have them all use the server's resources more efficiently, that has led to virtualization giving birth to the concept of Cloud computing and the massive providers we see today, such as AWS and Azure.